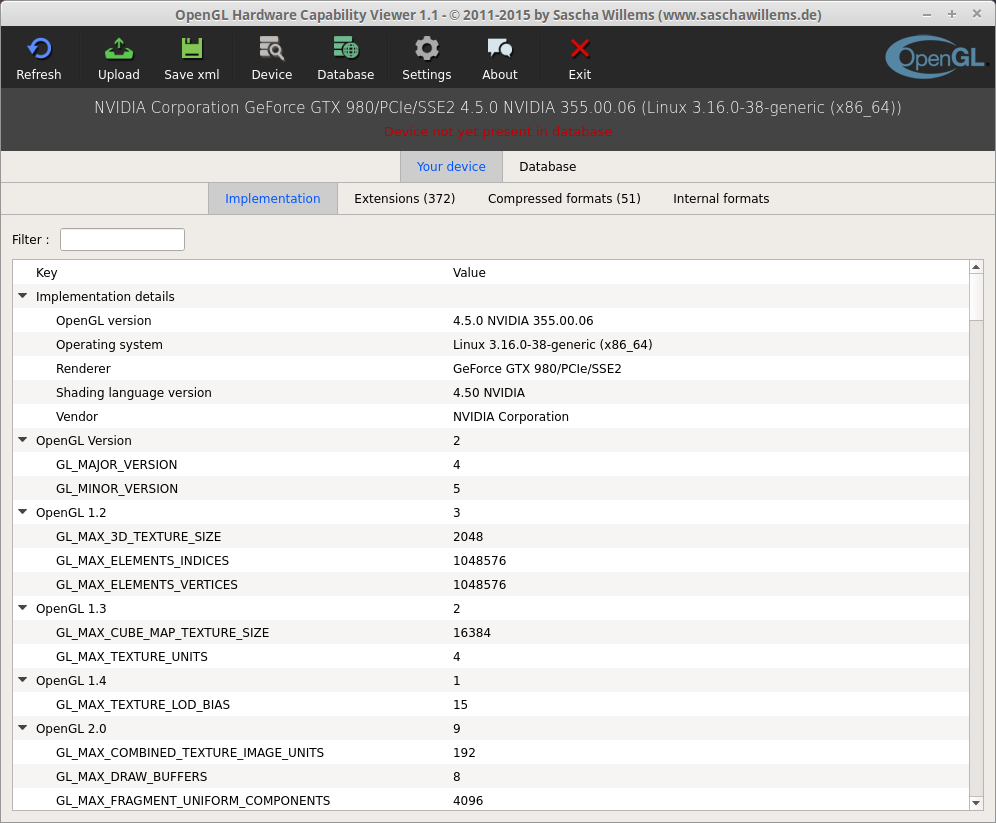

Fusion now provides hardware-accelerated DirectX 11 and OpenGL 4.1 capabilities to Windows and Linux virtual machines, with added support for eGPU devices. With up to 8 GB of vRAM per VM and a special ‘sandbox’ process to render graphics, Fusion leads the way in virtual machine graphics performance, accuracy and security. Download OpenGL Extensions Viewer for macOS 10.9 or later and enjoy it on your Mac. Many OpenGL extensions, as well as extensions to related APIs like GLU, GLX, and WGL, have been defined by vendors and groups of vendors.

This page provides links to both general release drivers that support OpenGL 4.6, and developer beta drivers that support upcoming OpenGL features.

Release Driver Downloads

OpenGL for Macintosh enables your computer to display three-dimensional graphics using applications designed to take advantage of OpenGL. Mac games become more realistic, more powerful and more fun.

OpenGL 4.6 support is available for Windows and Linux in our general release drivers available here:

Windows

Linux

Developer Beta Driver Downloads

Windows driver version 426.02 and Linux driver version 418.52.18 provide new features for OpenGL developers to test their upcoming OpenGL applications.

Windows 426.02

Linux 418.52.18

OpenGL Beta Release Notes

NVIDIA provides full OpenGL 4.6 support and functionality on NVIDIA GeForce and Quadro graphics cards with one of the following Turing, Volta, Pascal, Maxwell (first or second generation) or Kepler based GPUs:

Turing GPU Architecture

- TITAN: NVIDIA TITAN RTX

- GeForce RTX: GeForce RTX 2080 Ti, GeForce RTX 2080, GeForce RTX 2070, GeForce RTX 2060

- GeForce GTX: GeForce GTX 1660 Ti, GeForce GTX 1660, GeForce GTX 1650, GeForce MX250, GeForce MX230

- Quadro: Quadro RTX 8000, Quadro RTX 6000, Quadro RTX 5000, Quadro RTX 4000, Quadro RTX 3000, Quadro T2000, Quadro T1000

Volta GPU Architecture

- TITAN: NVIDIA TITAN V

- Quadro: Quadro GV100

Pascal GPU Architecture

- TITAN: NVIDIA TITAN Xp, NVIDIA TITAN X (Pascal)

- GeForce: GeForce GTX 1080 Ti, GeForce GTX 1080, GeForce GTX 1070 Ti, GeForce GTX 1070, GeForce GTX 1060, GeForce GTX 1050 Ti, GeForce GTX 1050, GeForce GT 1030, GeForce MX150

- Quadro: Quadro GP100, Quadro P6000, Quadro P5200, Quadro P5000, Quadro P4200, Quadro P4000, Quadro P3200, Quadro P3000, Quadro P2200, Quadro P2000, Quadro P1000, Quadro P620, Quadro P600, Quadro P520, Quadro P500, Quadro P400

Maxwell 2 GPU Architecture

- TITAN: GeForce GTX TITAN X

- GeForce: GeForce GTX 980 Ti, GeForce GTX 980, GeForce GTX 980M, GeForce GTX 970, GeForce GTX 970M, GeForce GTX 965M, GeForce GTX 960, GeForce GTX 950

- Quadro: Quadro M6000 24GB, Quadro M6000, Quadro M5500, Quadro M5000, Quadro M5000M, Quadro M4000, Quadro M4000M, Quadro M3000M, Quadro M2200, Quadro M2000

Maxwell 1 GPU Architecture

- GeForce: GeForce GTX 960M, GeForce GTX 950M, GeForce 945M, GeForce 940MX, GeForce 930MX, GeForce 920MX, GeForce 940M, GeForce 930M, GeForce GTX 860M, GeForce GTX 850M, GeForce 845M, GeForce 840M, GeForce 830M, GeForce GTX 750 Ti, GeForce GTX 750, GeForce GTX 745, GeForce MX130

- Quadro: Quadro M2000M, Quadro M1000M, Quadro M600M, Quadro M500M, Quadro M1200, Quadro M620, Quadro M520, Quadro K2200M, Quadro K620M

Kepler GPU Architecture

- TITAN: GeForce GTX TITAN, GeForce GTX TITAN Black, GeForce GTX TITAN Z

- GeForce: GeForce GTX 780 Ti, GeForce GTX 780, GeForce GTX 770, GeForce GTX 760, GeForce GTX 760 Ti (OEM), GeForce GT 740, GeForce GT 730, GeForce GT 720, GeForce GT 710, GeForce GTX 690, GeForce GTX 680, GeForce GTX 670, GeForce GTX 660 Ti, GeForce GTX 660, GeForce GTX 650 Ti BOOST, GeForce GTX 650 Ti, GeForce GTX 650, GeForce GTX 645, GeForce GT 640, GeForce GT 635, GeForce GT 630, GeForce MX110

- Quadro: Quadro K6000, Quadro K5200, Quadro K5000, Quadro K4000, Quadro K4200, Quadro K2200, Quadro K2000, Quadro K2000D, Quadro K1200, Quadro K620, Quadro K600, Quadro K420, Quadro 410

The OpenGL 4.6 specifications can be downloaded from http://www.opengl.org/registry/.

For any bugs or issues, please file a bug through the developer website: https://devtalk.nvidia.com/

Turing Extensions for OpenGL

GPUs with the new Turing architecture have many new OpenGL extensions giving developers access to new features.

Release Updates

July 29th, 2019 - Windows 426.02, Linux 418.52.18

- New:

- Release Notes

- The Release Notes for the CUDA Toolkit.

- EULA

- The End User License Agreements for the NVIDIA CUDA Toolkit, the NVIDIA CUDA Samples, the NVIDIA Display Driver, and NVIDIA Nsight (Visual Studio Edition).

Installation Guides

- Quick Start Guide

- This guide provides minimal first-step instructions for installing and verifying CUDA on a standard system.

- Installation Guide Windows

- This guide discusses how to install and check for correct operation of the CUDA Development Tools on Microsoft Windows systems.

- Installation Guide Linux

- This guide discusses how to install and check for correct operation of the CUDA Development Tools on GNU/Linux systems.

Programming Guides

- Programming Guide

- This guide provides a detailed discussion of the CUDA programming model and programming interface. It then describes the hardware implementation, and provides guidance on how to achieve maximum performance. The appendices include a list of all CUDA-enabled devices, detailed description of all extensions to the C++ language, listings of supported mathematical functions, C++ features supported in host and device code, details on texture fetching, technical specifications of various devices, and concludes by introducing the low-level driver API.

- Best Practices Guide

- This guide presents established parallelization and optimization techniques and explains coding metaphors and idioms that can greatly simplify programming for CUDA-capable GPU architectures. The intent is to provide guidelines for obtaining the best performance from NVIDIA GPUs using the CUDA Toolkit.

- Maxwell Compatibility Guide

- This application note is intended to help developers ensure that their NVIDIA CUDA applications will run properly on GPUs based on the NVIDIA Maxwell Architecture. This document provides guidance to ensure that your software applications are compatible with Maxwell.

- Pascal Compatibility Guide

- This application note is intended to help developers ensure that their NVIDIA CUDA applications will run properly on GPUs based on the NVIDIA Pascal Architecture. This document provides guidance to ensure that your software applications are compatible with Pascal.

- Volta Compatibility Guide

- This application note is intended to help developers ensure that their NVIDIA CUDA applications will run properly on GPUs based on the NVIDIA Volta Architecture. This document provides guidance to ensure that your software applications are compatible with Volta.

- Turing Compatibility Guide

- This application note is intended to help developers ensure that their NVIDIA CUDA applications will run properly on GPUs based on the NVIDIA Turing Architecture. This document provides guidance to ensure that your software applications are compatible with Turing.

- NVIDIA Ampere GPU Architecture Compatibility Guide

- This application note is intended to help developers ensure that their NVIDIA CUDA applications will run properly on GPUs based on the NVIDIA Ampere GPU Architecture. This document provides guidance to ensure that your software applications are compatible with NVIDIA Ampere GPU architecture.

- Kepler Tuning Guide

- Kepler is NVIDIA's 3rd-generation architecture for CUDA compute applications. Applications that follow the best practices for the Fermi architecture should typically see speedups on the Kepler architecture without any code changes. This guide summarizes the ways that applications can be fine-tuned to gain additional speedups by leveraging Kepler architectural features.

- Maxwell Tuning Guide

- Maxwell is NVIDIA's 4th-generation architecture for CUDA compute applications. Applications that follow the best practices for the Kepler architecture should typically see speedups on the Maxwell architecture without any code changes. This guide summarizes the ways that applications can be fine-tuned to gain additional speedups by leveraging Maxwell architectural features.

- Pascal Tuning Guide

- Pascal is NVIDIA's 5th-generation architecture for CUDA compute applications. Applications that follow the best practices for the Maxwell architecture should typically see speedups on the Pascal architecture without any code changes. This guide summarizes the ways that applications can be fine-tuned to gain additional speedups by leveraging Pascal architectural features.

- Volta Tuning Guide

- Volta is NVIDIA's 6th-generation architecture for CUDA compute applications. Applications that follow the best practices for the Pascal architecture should typically see speedups on the Volta architecture without any code changes. This guide summarizes the ways that applications can be fine-tuned to gain additional speedups by leveraging Volta architectural features.

- Turing Tuning Guide

- Turing is NVIDIA's 7th-generation architecture for CUDA compute applications. Applications that follow the best practices for the Pascal architecture should typically see speedups on the Turing architecture without any code changes. This guide summarizes the ways that applications can be fine-tuned to gain additional speedups by leveraging Turing architectural features.

- NVIDIA Ampere GPU Architecture Tuning Guide

- NVIDIA Ampere GPU Architecture is NVIDIA's 8th-generation architecture for CUDA compute applications. Applications that follow the best practices for the NVIDIA Volta architecture should typically see speedups on the NVIDIA Ampere GPU Architecture without any code changes. This guide summarizes the ways that applications can be fine-tuned to gain additional speedups by leveraging NVIDIA Ampere GPU Architecture's features.

- PTX ISA

- This guide provides detailed instructions on the use of PTX, a low-level parallel thread execution virtual machine and instruction set architecture (ISA). PTX exposes the GPU as a data-parallel computing device.

- Developer Guide for Optimus

- This document explains how CUDA APIs can be used to query for GPU capabilities in NVIDIA Optimus systems.

- Video Decoder

- NVIDIA Video Decoder (NVCUVID) is deprecated. Instead, use the NVIDIA Video Codec SDK (https://developer.nvidia.com/nvidia-video-codec-sdk).

- PTX Interoperability

- This document shows how to write PTX that is ABI-compliant and interoperable with other CUDA code.

- Inline PTX Assembly

- This document shows how to inline PTX (parallel thread execution) assembly language statements into CUDA code. It describes available assembler statement parameters and constraints, and the document also provides a list of some pitfalls that you may encounter.

- CUDA Occupancy Calculator

- The CUDA Occupancy Calculator allows you to compute the multiprocessor occupancy of a GPU by a given CUDA kernel.
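
To make the occupancy idea concrete, here is a minimal sketch of a warp-limited occupancy estimate. It is not the CUDA Occupancy Calculator itself: the function name `theoretical_occupancy` is hypothetical, and the default limits (64 resident warps and 32 resident blocks per multiprocessor, warp size 32) are illustrative assumptions that vary by GPU architecture. The real calculator also accounts for register and shared-memory usage, which this sketch ignores.

```python
def theoretical_occupancy(threads_per_block,
                          max_warps_per_sm=64,    # assumed limit, varies by GPU
                          max_blocks_per_sm=32,   # assumed limit, varies by GPU
                          warp_size=32):
    """Estimate occupancy as active warps / maximum warps per multiprocessor.

    Only the warp and block limits are modeled; register and shared-memory
    limits are deliberately left out of this sketch.
    """
    if threads_per_block <= 0:
        raise ValueError("threads_per_block must be positive")

    # A partially filled warp still occupies a full warp slot (round up).
    warps_per_block = -(-threads_per_block // warp_size)
    if warps_per_block > max_warps_per_sm:
        raise ValueError("block does not fit on one multiprocessor")

    # Blocks that fit are capped by both the warp limit and the block limit.
    blocks_by_warps = max_warps_per_sm // warps_per_block
    resident_blocks = min(blocks_by_warps, max_blocks_per_sm)

    active_warps = resident_blocks * warps_per_block
    return active_warps / max_warps_per_sm


if __name__ == "__main__":
    for size in (32, 96, 256, 1024):
        print(size, theoretical_occupancy(size))
```

Under these assumed limits, very small blocks are capped by the block limit rather than the warp limit, which is why a 32-thread block reaches only half occupancy in this model.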

CUDA API References

- CUDA Runtime API

- Fields in structures might appear in an order that is different from the order of declaration.

- CUDA Driver API

- Fields in structures might appear in an order that is different from the order of declaration.

- CUDA Math API

- The CUDA math API.

- cuBLAS

- The cuBLAS library is an implementation of BLAS (Basic Linear Algebra Subprograms) on top of the NVIDIA CUDA runtime. It allows the user to access the computational resources of an NVIDIA Graphics Processing Unit (GPU), but does not auto-parallelize across multiple GPUs.

- NVBLAS

- The NVBLAS library is a multi-GPU accelerated drop-in BLAS (Basic Linear Algebra Subprograms) library built on top of the NVIDIA cuBLAS Library.

- nvJPEG

- The nvJPEG Library provides high-performance GPU accelerated JPEG decoding functionality for image formats commonly used in deep learning and hyperscale multimedia applications.

- cuFFT

- The cuFFT library user guide.

- cuRAND

- The cuRAND library user guide.

- cuSPARSE

- The cuSPARSE library user guide.

- NPP

- NVIDIA NPP is a library of functions for performing CUDA accelerated processing. The initial set of functionality in the library focuses on imaging and video processing and is widely applicable for developers in these areas. NPP will evolve over time to encompass more of the compute heavy tasks in a variety of problem domains. The NPP library is written to maximize flexibility, while maintaining high performance.

- NVRTC (Runtime Compilation)

- NVRTC is a runtime compilation library for CUDA C++. It accepts CUDA C++ source code in character string form and creates handles that can be used to obtain the PTX. The PTX string generated by NVRTC can be loaded by cuModuleLoadData and cuModuleLoadDataEx, and linked with other modules by cuLinkAddData of the CUDA Driver API. This facility can often provide optimizations and performance not possible in a purely offline static compilation.

- Thrust

- The Thrust getting started guide.

- cuSOLVER

- The cuSOLVER library user guide.
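
For readers unfamiliar with BLAS, the interface that cuBLAS and NVBLAS implement, the following plain-Python reference shows what two level-1 BLAS operations compute. This is only an illustration of the math on the CPU. It does not call cuBLAS, and the function names `axpy` and `dot` are chosen here to echo the BLAS routine names rather than taken from any library binding.

```python
def axpy(alpha, x, y):
    """Return alpha * x + y elementwise (the BLAS level-1 'axpy' operation)."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    return [alpha * xi + yi for xi, yi in zip(x, y)]


def dot(x, y):
    """Return the inner product of x and y (the BLAS level-1 'dot' operation)."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    total = 0.0
    for xi, yi in zip(x, y):
        total += xi * yi
    return total


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0]
    y = [10.0, 20.0, 30.0]
    print(axpy(2.0, x, y))
    print(dot(x, y))
```

A GPU BLAS library performs the same arithmetic, but on vectors and matrices held in device memory and spread across many threads.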

PTX Compiler API References

- PTX Compiler APIs

- This guide shows how to compile a PTX program into GPU assembly code using APIs provided by the static PTX Compiler library.

Miscellaneous

- CUDA Samples

- This document contains a complete listing of the code samples that are included with the NVIDIA CUDA Toolkit. It describes each code sample, lists the minimum GPU specification, and provides links to the source code and white papers if available.

- CUDA Demo Suite

- This document describes the demo applications shipped with the CUDA Demo Suite.

- CUDA on WSL

- This guide is intended to help users get started with using NVIDIA CUDA on Windows Subsystem for Linux (WSL 2). The guide covers installation and running CUDA applications and containers in this environment.

- Multi-Instance GPU (MIG)

- This edition of the user guide describes the Multi-Instance GPU feature of the NVIDIA® A100 GPU.

- CUPTI

- The CUPTI-API. The CUDA Profiling Tools Interface (CUPTI) enables the creation of profiling and tracing tools that target CUDA applications.

- Debugger API

- The CUDA debugger API.

- GPUDirect RDMA

- A technology introduced in Kepler-class GPUs and CUDA 5.0, enabling a direct path for communication between the GPU and a third-party peer device on the PCI Express bus when the devices share the same upstream root complex using standard features of PCI Express. This document introduces the technology and describes the steps necessary to enable a GPUDirect RDMA connection to NVIDIA GPUs within the Linux device driver model.

- vGPU

- vGPUs that support CUDA.

Tools

- NVCC

- This is a reference document for nvcc, the CUDA compiler driver. nvcc accepts a range of conventional compiler options, such as for defining macros and include/library paths, and for steering the compilation process.

- CUDA-GDB

- The NVIDIA tool for debugging CUDA applications running on Linux and Mac, providing developers with a mechanism for debugging CUDA applications running on actual hardware. CUDA-GDB is an extension to the x86-64 port of GDB, the GNU Project debugger.

- CUDA-MEMCHECK

- CUDA-MEMCHECK is a suite of run time tools capable of precisely detecting out of bounds and misaligned memory access errors, checking device allocation leaks, reporting hardware errors and identifying shared memory data access hazards.

- Compute Sanitizer

- The user guide for Compute Sanitizer.

- Nsight Eclipse Plugins Installation Guide

- Nsight Eclipse Plugins Installation Guide

- Nsight Eclipse Plugins Edition

- Nsight Eclipse Plugins Edition getting started guide

- Nsight Compute

- NVIDIA Nsight Compute is the next-generation interactive kernel profiler for CUDA applications. It provides detailed performance metrics and API debugging via a user interface and command line tool.

- Profiler

- This is the guide to the Profiler.

- CUDA Binary Utilities

- The application notes for cuobjdump, nvdisasm, and nvprune.

White Papers

- Floating Point and IEEE 754

- A number of issues related to floating point accuracy and compliance are a frequent source of confusion on both CPUs and GPUs. The purpose of this white paper is to discuss the most common issues related to NVIDIA GPUs and to supplement the documentation in the CUDA C++ Programming Guide.

- Incomplete-LU and Cholesky Preconditioned Iterative Methods

- In this white paper we show how to use the cuSPARSE and cuBLAS libraries to achieve a 2x speedup over CPU in the incomplete-LU and Cholesky preconditioned iterative methods. We focus on the Bi-Conjugate Gradient Stabilized and Conjugate Gradient iterative methods, which can be used to solve large sparse nonsymmetric and symmetric positive definite linear systems, respectively. We also comment on the parallel sparse triangular solve, which is an essential building block in these algorithms.
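
The sparse triangular solve mentioned in the white paper description can be sketched as follows. This is a sequential CPU reference for a lower-triangular system stored in CSR (compressed sparse row) form, not the parallel algorithm from the white paper and not the cuSPARSE API. The function name `csr_lower_solve` and the argument layout are assumptions made for illustration.

```python
def csr_lower_solve(row_ptr, col_idx, values, b):
    """Solve L x = b by forward substitution, with L lower triangular in CSR.

    row_ptr[i]:row_ptr[i + 1] indexes the nonzeros of row i in col_idx/values.
    """
    n = len(b)
    if len(row_ptr) != n + 1:
        raise ValueError("row_ptr must have len(b) + 1 entries")

    x = [0.0] * n
    for i in range(n):
        acc = b[i]
        diag = None
        for k in range(row_ptr[i], row_ptr[i + 1]):
            j = col_idx[k]
            if j < i:
                # Subtract contributions of unknowns that are already solved.
                acc -= values[k] * x[j]
            elif j == i:
                diag = values[k]
            else:
                raise ValueError("matrix is not lower triangular")
        if diag is None or diag == 0.0:
            raise ValueError("zero or missing diagonal in row %d" % i)
        x[i] = acc / diag
    return x


if __name__ == "__main__":
    # L = [[2, 0, 0],
    #      [1, 1, 0],
    #      [0, 3, 4]]
    row_ptr = [0, 1, 3, 5]
    col_idx = [0, 0, 1, 1, 2]
    values = [2.0, 1.0, 1.0, 3.0, 4.0]
    print(csr_lower_solve(row_ptr, col_idx, values, [2.0, 3.0, 11.0]))
```

Each row depends on the solutions of earlier rows, so the loop over rows cannot simply be split across threads. That dependency is what makes the parallel version of this building block nontrivial.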

Application Notes

- CUDA for Tegra

- This application note provides an overview of NVIDIA® Tegra® memory architecture and considerations for porting code from a discrete GPU (dGPU) attached to an x86 system to the Tegra® integrated GPU (iGPU). It also discusses EGL interoperability.

Compiler SDK

- libNVVM API

- The libNVVM API.

- libdevice User's Guide

- The libdevice library is an LLVM bitcode library that implements common functions for GPU kernels.

- NVVM IR

- NVVM IR is a compiler IR (intermediate representation) based on the LLVM IR. The NVVM IR is designed to represent GPU compute kernels (for example, CUDA kernels). High-level language front-ends, like the CUDA C compiler front-end, can generate NVVM IR.